i. Contents

| I. | Purpose |
| II. | General Priorities |
| III. | Design Outline |
| IV. | Design Issues |
| V. | Design Details |

I. Purpose

This document describes the design of the first phase of the DTPF in accordance with the project requirements.

The first phase will implement the core framework for offline (as opposed to real-time) processing. This phase will not emphasize graphical user interface (GUI) aspects, though it will provide features for later GUI integration. For further background information and a listing of the requirements, the reader is referred to the project requirements document.

II. General Priorities

The priorities guiding the design of this first phase of the DTPF project are focused on the project implementors. In rough order of precedence these are:

- Maintainability: This project will continue to be developed for some time. After the first phase a certain amount of application software will depend upon the DTPF, and modifications will need to be made with minimal effect on this application software. New programmers may also become involved in the work. Modifications and enhancements are also likely to occur some time after the initial implementation, when the original implementors have moved on or are unavailable. It is also expected that the effective working knowledge of those familiar with the framework but not actively working on it will decline with time. The architecture and design need to be documented with these considerations in mind. See: Non-Functional Requirements, Section V.A.

- Usability: Here we are mainly concerned with the usability of the API presented to application developers using the DTPF to create new software. Usability for end-users will become more of a concern as this first phase nears completion and detailed planning of later phases begins. The API must be complete enough to satisfy the requirements, allowing client program code access to framework services. The API must also allow for the creation of nodes implementing sensory model components with substantially less effort than developing custom application software, as discussed in the requirements background information. See: Functional Requirements, all sections.

- Extensibility: Future plans for interacting with hardware (e.g., video capture, motor control) will require additions beyond this first phase. The design needs to take this into account and not be specialized to the offline situation. See: Non-Functional Requirements, Section V.E.

- Efficiency: As the first phase of the project is to support offline processing, free of real-time constraints, efficiency is of less concern. Reasonable care should be taken to not consume excessive resources for the framework itself to avoid starving the actual processing and to facilitate future application to more time-bound situations. See: Non-Functional Requirements, Section V.F.

- Portability: Portability is a lesser concern here. However, the design should identify platform-dependent components and provide appropriate abstraction to shield the DTPF from platform dependencies. See: Non-Functional Requirements, Section V.D.

III. Design Outline

High-level description of the design and architecture.

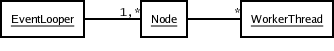

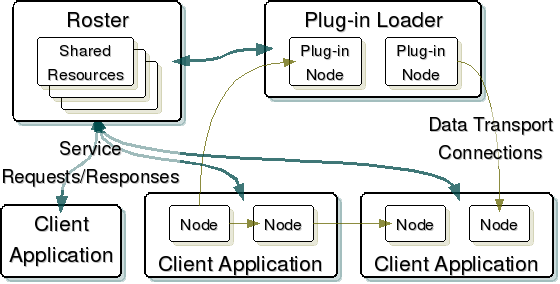


Figure 1: A top-level view of the DTPF architectural model. Note that the connections shown between nodes are illustrative of a hypothetical situation. In general any node can be connected to any other.

The architectural model adopted is roughly that of the BeOS MediaKit. Processing is organized into nodes which can be connected together to send data from one to another. Node execution is event-based and multi-threaded (e.g., every node has an associated thread for event handling). Nodes can be implemented as application classes that are linked with a client application at compile time, or as plug-ins that are dynamically linked at run time. A roster server application (provided with DTPF) maintains bookkeeping information and records of framework resources (e.g., available nodes, potential connection endpoints, connections between nodes), is responsible for creating and controlling certain resources (e.g., shared memory buffers available to separate operating system processes), and acts as a broker for client requests for framework services. A client interface allows program code (node implementations or client applications) to communicate with the roster. A plug-in loader application (provided with DTPF) loads nodes implemented as plug-ins in dynamically linked shared libraries.
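The node and roster roles described above can be illustrated with a minimal sketch. It is not the DTPF API: the names `Node`, `Roster`, `Doubler`, `Collector`, and `run_demo` are hypothetical, and the roster here is an in-process object standing in for what the design describes as a separate server application. The sketch only shows the general pattern of one event-handling thread per node, with connections recorded by a central bookkeeper.

```python
import queue
import threading

_STOP = object()  # sentinel event telling a node's thread to exit


class Node:
    """A processing node with its own event-handling thread (hypothetical API)."""

    def __init__(self, name):
        self.name = name
        self.outputs = []            # downstream nodes this node sends data to
        self.events = queue.Queue()  # pending events, handled in FIFO order
        self.thread = threading.Thread(target=self._run, daemon=True)

    def connect(self, downstream):
        self.outputs.append(downstream)

    def start(self):
        self.thread.start()

    def post(self, data):
        self.events.put(data)

    def stop(self):
        # Queue the sentinel behind any pending events, then wait for the thread.
        self.events.put(_STOP)
        self.thread.join()

    def handle(self, data):
        """Override in subclasses; the return value is forwarded downstream."""
        return data

    def _run(self):
        while True:
            event = self.events.get()
            if event is _STOP:
                break
            result = self.handle(event)
            for node in self.outputs:
                node.post(result)


class Roster:
    """In-process stand-in for the roster: records nodes and connections."""

    def __init__(self):
        self.nodes = {}
        self.connections = []
        self._lock = threading.Lock()

    def register(self, node):
        with self._lock:
            self.nodes[node.name] = node

    def connect(self, source_name, sink_name):
        with self._lock:
            self.nodes[source_name].connect(self.nodes[sink_name])
            self.connections.append((source_name, sink_name))


class Doubler(Node):
    def handle(self, data):
        return data * 2


class Collector(Node):
    def __init__(self, name):
        super().__init__(name)
        self.received = []

    def handle(self, data):
        self.received.append(data)
        return data


def run_demo():
    roster = Roster()
    doubler, sink = Doubler("doubler"), Collector("sink")
    for node in (doubler, sink):
        roster.register(node)
        node.start()
    roster.connect("doubler", "sink")
    for value in (1, 2, 3):
        doubler.post(value)
    # Stop upstream first so every forwarded event reaches the sink.
    doubler.stop()
    sink.stop()
    return sink.received, roster.connections
```

Routing connection requests through the roster, rather than letting nodes connect to each other directly, keeps the record of connections in one place, which is the bookkeeping role the design assigns to the roster.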

IV. Design Issues

Important design issues that have been or need to be resolved.

| IV.A: | Issue: Foundational code and system dependent functionality. Details: Proposal: |
| IV.A.1: | Issue: Management of operating system resources. Details: Proposal: |
| IV.B: | Issue: Details: Proposal: |
| IV.C: | Issue: Details: Proposal: |
| IV.D: | Issue: Details: Proposal: |
| IV.E: | Issue: Details: Proposal: |
| IV.F: | Issue: Details: Proposal: |
| IV.G: | Issue: Details: Proposal: |
| IV.H: | Issue: Details: Proposal: |
| | Issue: Details: Proposal: |

V. Design Details

Other details the reader will need to know that have not yet been mentioned will be added here.